Ingeotec at Rest-Mex: Bag-of-Words Classifiers

IberLEF 2023, September 2023, Jaén, Spain

INFOTEC

CentroGEO

INFOTEC

INFOTEC

INGEOTEC research group

GitHub: https://github.com/INGEOTEC

Web page: https://ingeotec.github.io/

Our approach: EvoMSA 2.0

EvoMSA’s documentation (https://evomsa.readthedocs); papers: Graff et al. (2020) and Tellez et al. (2017).

Our representations

- Sparse bag of words (SBoW)

- Dense bag of words (DBoW)
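To make the sparse representation concrete, here is a minimal plain-Python sketch of a TF-IDF bag of words built from words and character q-grams. It is an illustration under assumptions, not EvoMSA’s implementation: the tokenizer, the q-gram sizes (2, 3, 4), the `~` word-boundary marker, and the names `tokenize`, `fit_idf`, and `sbow` are all hypothetical.

```python
import math
import re
from collections import Counter


def tokenize(text, qgrams=(2, 3, 4)):
    """Hypothetical tokenizer: lowercased words plus character q-grams."""
    words = re.findall(r"\w+", text.lower())
    tokens = list(words)
    # Join words with '~' so that q-grams can capture word boundaries (assumption).
    joined = "~" + "~".join(words) + "~"
    for q in qgrams:
        tokens.extend(joined[i:i + q] for i in range(len(joined) - q + 1))
    return tokens


def fit_idf(corpus):
    """Inverse document frequency of every token observed in the corpus."""
    df = Counter()
    for text in corpus:
        df.update(set(tokenize(text)))
    n = len(corpus)
    return {tok: math.log(n / freq) for tok, freq in df.items()}


def sbow(text, idf):
    """Sparse TF-IDF vector (dict token -> weight), L2-normalized.

    Tokens outside the fitted vocabulary, or present in every document
    (IDF = 0), are dropped."""
    tf = Counter(tok for tok in tokenize(text) if tok in idf)
    vec = {tok: cnt * idf[tok] for tok, cnt in tf.items() if idf[tok] > 0}
    norm = math.sqrt(sum(w * w for w in vec.values()))
    return {tok: w / norm for tok, w in vec.items()} if norm > 0 else {}


corpus = ["El hotel es muy bonito", "La comida es mala", "El restaurante es bonito"]
idf = fit_idf(corpus)
vector = sbow("hotel bonito", idf)
print(len(vector), round(sum(w * w for w in vector.values()), 6))
```

Storing the vector as a dictionary keeps only the non-zero coordinates, which is what makes the representation sparse even when the vocabulary is large.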

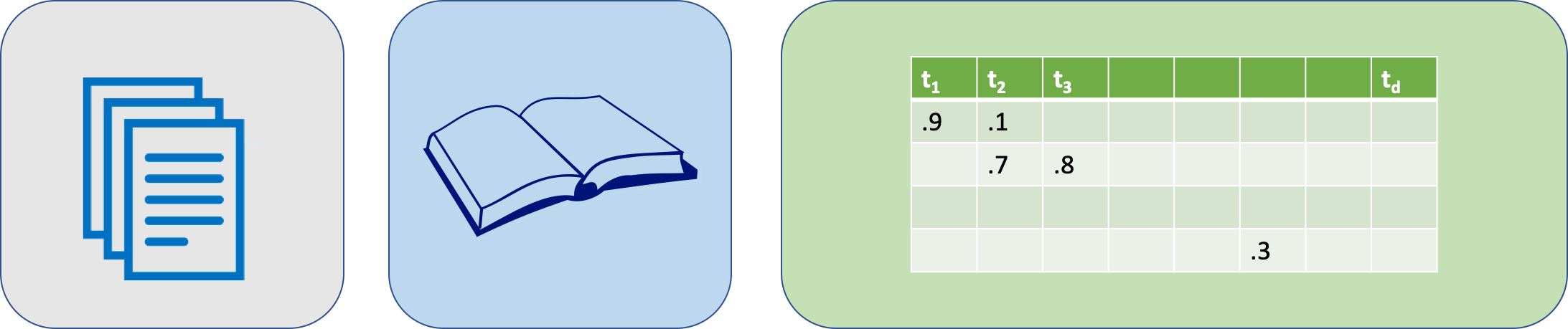

SBoW

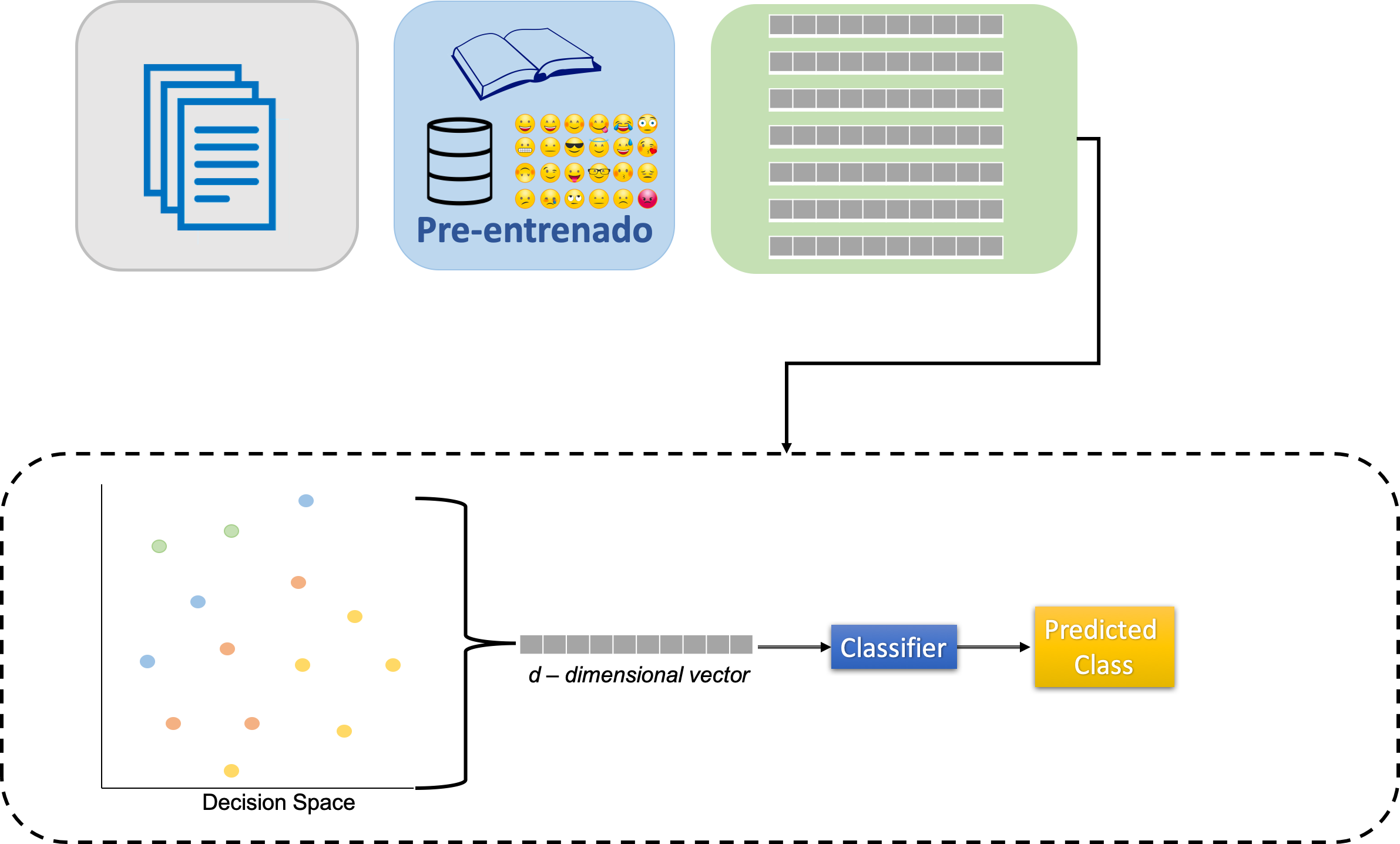

Dense BoW (stacking)

Stacking: the outputs of all the aggregated models are weighted and combined to produce the final classification.
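Stacking is usually trained on out-of-fold outputs, so the top classifier only sees scores that the base models produced for texts they were not trained on. The sketch below shows that first level in plain Python. It assumes a hypothetical interface in which each base model is a `fit(X, y)` function returning a scorer, uses a toy nearest-centroid learner in place of linear SVMs, and splits folds round-robin; none of these names come from EvoMSA.

```python
def out_of_fold_features(models, X, y, k=5):
    """Level 0 of stacking: for every example, concatenate the per-class
    scores that each base model assigns to it while it is held out."""
    n = len(X)
    meta = [None] * n
    for fold in range(k):
        test_idx = list(range(fold, n, k))  # round-robin split (assumption)
        held_out = set(test_idx)
        train_idx = [i for i in range(n) if i not in held_out]
        X_train = [X[i] for i in train_idx]
        y_train = [y[i] for i in train_idx]
        scorers = [fit(X_train, y_train) for fit in models]
        for i in test_idx:
            meta[i] = [s for score in scorers for s in score(X[i])]
    return meta


def centroid_model(feature):
    """Toy base learner on a single feature: score = -distance to class mean."""
    def fit(X, y):
        classes = sorted(set(y))
        means = {}
        for c in classes:
            values = [x[feature] for x, label in zip(X, y) if label == c]
            means[c] = sum(values) / len(values)
        return lambda x: [-abs(x[feature] - means[c]) for c in classes]
    return fit


X = [(0.1, 1.0), (0.2, 0.9), (0.9, 0.1), (0.8, 0.2), (0.15, 0.8),
     (0.85, 0.3), (0.05, 0.95), (0.95, 0.05), (0.2, 0.7), (0.7, 0.2)]
y = [0, 0, 1, 1, 0, 1, 0, 1, 0, 1]
meta = out_of_fold_features([centroid_model(0), centroid_model(1)], X, y)
```

Each row of `meta` holds two scores per base model (one per class); these rows are the inputs on which the top classifier learns how much to trust each model.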

DBoW parameters

Following the same approach used to develop the pre-trained BoW, we created different dense representations.

They differ in vocabulary size and in which of the two token-selection procedures is used. The resulting vector spaces are:

- dataset is in \(\mathbb R^{57}\).

- emoji is in \(\mathbb R^{567}\).

- keyword is in \(\mathbb R^{2048}\).
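One way to read these dimensions, stated here as an assumption: each coordinate of a dense BoW vector is the decision value of one pre-trained linear classifier applied to the sparse BoW of the text, so \(\mathbb R^{57}\) would correspond to 57 such classifiers. The hypothetical `dense_bow` function below sketches that projection, with sparse vectors stored as dictionaries.

```python
def dense_bow(sparse_vec, classifiers):
    """Map a sparse BoW vector (dict token -> weight) to a dense vector.

    `classifiers` is a list of (weights, bias) pairs, where `weights` is a
    dict token -> coefficient. Coordinate i is w_i . x + b_i, so the output
    dimension equals the number of classifiers."""
    return [
        sum(weights.get(tok, 0.0) * value for tok, value in sparse_vec.items()) + bias
        for weights, bias in classifiers
    ]


x = {"hotel": 0.6, "bonito": 0.8}
classifiers = [({"hotel": 1.0}, 0.0), ({"bonito": 2.0, "malo": -1.0}, -0.5), ({}, 0.25)]
print(dense_bow(x, classifiers))
```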

Configurations

We tested 13 different configurations for each task. The configuration with the best performance, estimated with k-fold cross-validation (\(k=5\)), was submitted to the contest.
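The selection protocol can be sketched as follows: every candidate configuration is scored by k-fold cross-validation, and the one with the highest mean score wins. This is a minimal plain-Python sketch; the fold construction (a single seeded shuffle, not stratified), the `fit(X, y) -> predict` interface, and the two toy configurations are assumptions. The toy score is accuracy for brevity, whereas the reported results use F1.

```python
import random
from collections import Counter
from statistics import mean


def kfold_indices(n, k=5, seed=0):
    """Shuffle 0..n-1 once and deal the indices into k folds."""
    idx = list(range(n))
    random.Random(seed).shuffle(idx)
    return [idx[f::k] for f in range(k)]


def cv_score(fit, X, y, score, k=5):
    """Mean test-fold score of a configuration over k folds."""
    results = []
    for test_idx in kfold_indices(len(X), k):
        held_out = set(test_idx)
        train_idx = [i for i in range(len(X)) if i not in held_out]
        predict = fit([X[i] for i in train_idx], [y[i] for i in train_idx])
        y_true = [y[i] for i in test_idx]
        y_pred = [predict(X[i]) for i in test_idx]
        results.append(score(y_true, y_pred))
    return mean(results)


def select_best(configs, X, y, score, k=5):
    """Return the name of the best configuration and all CV scores."""
    scores = {name: cv_score(fit, X, y, score, k) for name, fit in configs.items()}
    return max(scores, key=scores.get), scores


# Toy usage: two hypothetical configurations, scored with accuracy.
def majority(X, y):
    label = Counter(y).most_common(1)[0][0]
    return lambda x: label


def threshold(X, y):
    return lambda x: int(x > 0.5)


def accuracy(y_true, y_pred):
    return sum(t == p for t, p in zip(y_true, y_pred)) / len(y_true)


X = [i / 20 for i in range(20)]
y = [int(x > 0.5) for x in X]
best, scores = select_best({"majority": majority, "threshold": threshold}, X, y, accuracy)
```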


The configurations tested in this competition are described below; they include BoW alone and BoW combined with dense representations. Stacked generalization combines the different text classifiers, with a Naive Bayes algorithm as the top classifier. The specific implementation of these configurations can be found in EvoMSA’s documentation.
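For intuition about the top classifier, here is a from-scratch Gaussian Naive Bayes trained on stacked scores (one column per base-model output). The Gaussian variant, the function names, and the absolute `var_smoothing` term are assumptions made for this sketch; scikit-learn's `GaussianNB`, for instance, scales its smoothing by the largest feature variance.

```python
import math
from collections import defaultdict


def fit_gaussian_nb(X, y, var_smoothing=1e-9):
    """Per class: log prior, feature means, and (smoothed) variances."""
    rows_by_class = defaultdict(list)
    for x, label in zip(X, y):
        rows_by_class[label].append(x)
    n, d = len(X), len(X[0])
    model = {}
    for label, rows in rows_by_class.items():
        means = [sum(r[j] for r in rows) / len(rows) for j in range(d)]
        variances = [
            sum((r[j] - means[j]) ** 2 for r in rows) / len(rows) + var_smoothing
            for j in range(d)
        ]
        model[label] = (math.log(len(rows) / n), means, variances)
    return model


def predict_gaussian_nb(model, x):
    """Return the class with the highest log joint probability."""
    def log_joint(label):
        log_prior, means, variances = model[label]
        return log_prior - 0.5 * sum(
            math.log(2 * math.pi * v) + (xj - m) ** 2 / v
            for xj, m, v in zip(x, means, variances)
        )
    return max(model, key=log_joint)


# Stacked scores from two hypothetical base models for six training texts.
meta_X = [[2.0, 1.5], [1.8, 1.2], [2.2, 1.9], [-1.0, -0.5], [-1.5, -1.2], [-0.8, -0.9]]
meta_y = ["positive", "positive", "positive", "negative", "negative", "negative"]
top = fit_gaussian_nb(meta_X, meta_y)
```

Because Naive Bayes learns one mean and variance per class and per input column, it implicitly weighs each base model by how well its scores separate the classes.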

Results

| Configuration | Type | Country | Polarity |
|---|---|---|---|
| bow_training_set | **0.9802** | **0.9260** | 0.5179 |
| bow | 0.9793 | 0.9194 | 0.5167 |
| stack_3_bows | 0.9793 | 0.9225 | 0.5603 |
| bow_voc_selection | 0.9792 | 0.9200 | 0.5152 |
| stack_3_bow_tailored_all_keywords | 0.9783 | 0.9166 | 0.5467 |
| stack_3_bows_tailored_keywords | 0.9783 | 0.9164 | 0.5448 |
| stack_bows | 0.9782 | 0.9167 | **0.5605** |
| stack_2_bow_tailored_keywords | 0.9773 | 0.9097 | 0.5448 |
| stack_2_bow_tailored_all_keywords | 0.9773 | 0.9101 | 0.5446 |
| stack_2_bow_keywords | 0.9769 | 0.9076 | 0.5420 |
| stack_2_bow_all_keywords | 0.9768 | 0.9076 | 0.5431 |
| stack_bow_keywords_emojis | 0.9743 | 0.8951 | 0.5310 |
| stack_bow_keywords_emojis_voc_selection | 0.9742 | 0.8949 | 0.5346 |

Performance, in terms of F1, of the different configurations under five-fold cross-validation. The best performance in each column is in boldface.
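The caption does not state how F1 is averaged over classes. Assuming the macro average that is common in multi-class shared tasks, each score can be computed as in this hypothetical `macro_f1` helper, which uses the identity F1 = 2TP / (2TP + FP + FN) per class and then takes the unweighted mean.

```python
def macro_f1(y_true, y_pred):
    """Unweighted mean of the per-class F1 scores."""
    labels = sorted(set(y_true) | set(y_pred))
    scores = []
    for c in labels:
        tp = sum(t == c and p == c for t, p in zip(y_true, y_pred))
        fp = sum(t != c and p == c for t, p in zip(y_true, y_pred))
        fn = sum(t == c and p != c for t, p in zip(y_true, y_pred))
        # Every label occurs in y_true or y_pred, so the denominator is > 0.
        scores.append(2 * tp / (2 * tp + fp + fn))
    return sum(scores) / len(scores)


print(macro_f1([1, 1, 2, 2, 3, 3], [1, 2, 2, 2, 3, 1]))
```

Macro averaging gives every class the same weight, so a minority class with poor predictions lowers the score as much as a majority class would.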

Competition

| | Type | Country | Polarity |
|---|---|---|---|
| Winner | 0.9903 | 0.9420 | 0.6217 |
| INGEOTEC | 0.9805 | 0.9271 | 0.5549 |
| Difference | 1.0% | 1.6% | 12.0% |
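The Difference row is consistent with the winner’s advantage expressed relative to INGEOTEC’s score, \((\text{winner} - \text{ours}) / \text{ours}\); for polarity, \((0.6217 - 0.5549) / 0.5549 \approx 12.0\%\). The same formula reproduces the Difference values reported for the other competitions. A one-line helper (the name `relative_gap` is hypothetical) makes the computation explicit:

```python
def relative_gap(winner, ours):
    """Winner's advantage as a percentage of our score."""
    return 100 * (winner - ours) / ours


for task, winner, ours in [("Type", 0.9903, 0.9805),
                           ("Country", 0.9420, 0.9271),
                           ("Polarity", 0.6217, 0.5549)]:
    print(f"{task}: {relative_gap(winner, ours):.1f}%")
```

Dividing by our score rather than the winner’s yields slightly larger percentages; for polarity, dividing by 0.6217 would give about 10.7% instead of 12.0%.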

Performance in other competitions

| Competition | Winner | EvoMSA 2.0 | Difference (relative to EvoMSA 2.0) |
|---|---|---|---|
| PoliticEs (Gender) | 0.8296 | 0.7115 | 16.6% |
| PoliticEs (Profession) | 0.8608 | 0.8379 | 2.7% |
| PoliticEs (Ideology Binary) | 0.8967 | 0.8913 | 0.6% |
| PoliticEs (Ideology Multiclass) | 0.6913 | 0.6694 | 3.3% |
| REST-MEX (Polarity) | 0.6216 | 0.5548 | 12.0% |
| REST-MEX (Type) | 0.9903 | 0.9805 | 1.0% |
| REST-MEX (Country) | 0.9420 | 0.9270 | 1.6% |
| HOMO-MEX | 0.8847 | 0.8050 | 9.9% |
| HOPE (ES) | 0.9161 | 0.4198 | 118.2% |
| HOPE (EN) | 0.5012 | 0.4429 | 13.2% |
| DIPROMATS (ES) | 0.8089 | 0.7485 | 8.1% |
| DIPROMATS (EN) | 0.8090 | 0.7255 | 11.5% |
| HUHU | 0.820 | 0.775 | 5.8% |

Conclusions

We used our EvoMSA framework to merge the outputs of different internal models to solve the Rest-Mex 2023 challenge; these models are mainly built on large pre-trained and locally trained vocabularies, which capture lexical and semantic features, combined with linear SVMs.

- Our system is an ensemble of multiple models combined through stacked generalization, implemented with our EvoMSA framework.

- We obtained competitive models compared with more complex and expensive deep learning approaches.

- Our approach uses lexical and semantic features, all computed as bags of words (dense and sparse).

- We achieved F1 scores of 0.9805, 0.9271, and 0.5549 for type, country, and polarity, respectively.

- Our results trail the winning solution by between 1% (type) and 12% (polarity).

Conclusions (continued…)

- Our results show that competitive models for the Rest-Mex tasks can be developed using only text-based features and, moreover, only bag-of-words-based models.

- Explainability: a simple BoW model alone already obtains outstanding results, making it the simplest solution.

- Fast solution (in both training and testing) that requires few computational resources; the dense representations were built from at most 100 million tweets.

Thanks

Questions?

We also want to promote the use of our EvoMSA library.

For the EvoMSA documentation, see:

https://evomsa.readthedocs.io/en/docs/

EvoMSA GitHub repository:

https://github.com/INGEOTEC/EvoMSA